Towards a New Kind of Statistics for Data Science and Beyond

Source: pixabay.com

In a previous article, I talked about the limitations of Statistics and how they hinder data science work. Naturally, these shortcomings also make the whole framework of Stats vulnerable to anyone who knows enough to abuse it and promote whatever "facts" they wish to propagate. This is not the fault of Statistics professionals (especially the brilliant mathematicians who laid the foundations of this framework), but rather of those who use it without questioning it or without going into any depth. Some people, especially among the newer generations of data scientists, have decided either to leave it behind or to combine it with more modern frameworks, such as machine learning and deep learning in particular. Although they have had some success, they still haven't addressed any of the limitations mentioned in my article, nor is there any promise of doing so in the foreseeable future. Since dropping Stats altogether would be unwise, to put it mildly, perhaps it's worth exploring the possibility of fixing it by introducing a new framework that takes the best of both worlds.

Enter BROOM, a quasi-statistical framework I've developed in Julia (version 1.6, to be exact) over the past few months. BROOM is a strictly data-driven framework, meaning that it doesn't assume anything about the data. You may be a novice in data analytics and still be able to use it, as long as you are familiar with its various functions, all of which are documented comprehensively in the source code files (I haven't gotten around to creating online documentation, as I've been focusing on making the framework scalable and bug-free). Even if you don't know much about Statistics (an introductory course would be more than enough), you can still use this framework to tackle your data analytics and data engineering tasks. If you combine it with a data model that is somewhat transparent, e.g. a decision tree ensemble, you can even guarantee end-to-end transparency (e2et) in your work.

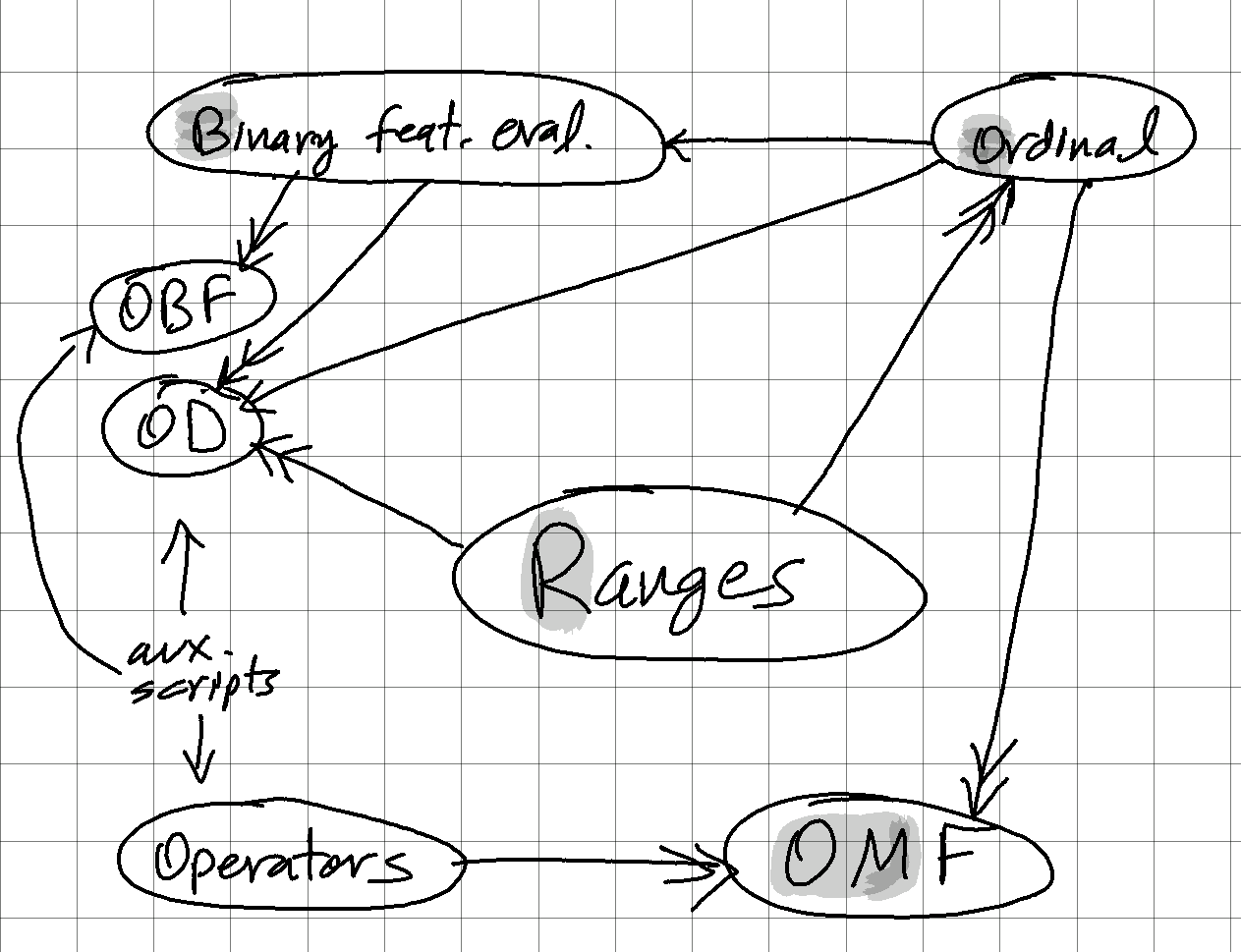

BROOM comprises four core scripts that tackle specific tasks and include several functions each. These are the following:

- Binary feature evaluation – As the name suggests, this script deals with analyzing binary features/variables and helps us figure out whether they are useful for our models. It includes a few evaluation metrics based on the Jaccard similarity, the most useful of which is the holistic symmetric similarity (HSS) metric, which also generalizes to nominal features.

- Ranges of continuous variables – Since the range of a variable in Statistics is poorly defined and implemented (making it a serious liability when outliers are present), this script provides a series of range-related metrics, as well as a variety of methods and metrics derived from the best of these ranges. It is probably the most fundamental script in the framework, as it also includes methods for identifying and handling outliers, plus a test that compares a data point to a (continuous) variable to establish membership and gain an understanding of the (real) distribution of the data. The more advanced functions of this script make use of membership, a fundamental concept of Fuzzy Logic.

- Ordinal features – Ordinal features have been greatly underrated. This script treats them as the most important thing in the world and establishes various ways to analyze them, as well as methods for turning a continuous variable into an ordinal one, based on the optimal-number-of-bins method from the ranges script. Using some simple heuristics, it also explores how two continuous variables can be analyzed for correlation by turning them into ordinal variables first. This way, non-linearities are handled properly, going beyond the shallow view of correlation provided by the Pearson correlation coefficient. The script also contains median-based two-sample tests.

- Optimal Meta-features based on continuous variables (OM) – This script explores how you can combine various continuous features optimally, using the ordinal script, as well as a series of operators (see operators script below). The idea is to build a model that's simple enough to be robust, but sophisticated enough to take into account the non-linear relationships of the original features to the target variable. This is the simplest of all the core scripts as it relies heavily on the others.
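To make a few of these ideas concrete, here is a minimal Python sketch. It is not BROOM code (BROOM is written in Julia, and its own metrics, such as HSS and the "best" range, are not reproduced here); all function names are hypothetical and the techniques are standard stand-ins: plain Jaccard similarity between two binary variables, Tukey-style interquartile fences as a robust alternative to the max-minus-min range for flagging outliers, and equal-frequency binning of two continuous variables before correlating their bin indices, so that a monotone but non-linear relationship is not penalized the way raw Pearson correlation penalizes it.

```python
from statistics import quantiles

# Illustrative stand-ins only: none of these functions come from BROOM
# (which is written in Julia); names and techniques are assumptions.


def jaccard(x, y):
    """Jaccard similarity of two binary (0/1) sequences: |x AND y| / |x OR y|."""
    both = sum(1 for a, b in zip(x, y) if a and b)
    either = sum(1 for a, b in zip(x, y) if a or b)
    return both / either if either else 0.0


def iqr_fences(values, k=1.5):
    """Tukey-style fences around the interquartile range.

    Unlike max - min, these bounds barely move when a few extreme
    values are present, so they can be used to flag outliers.
    """
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    return q1 - k * spread, q3 + k * spread


def flag_outliers(values, k=1.5):
    """Return True for every value that falls outside the IQR fences."""
    low, high = iqr_fences(values, k)
    return [v < low or v > high for v in values]


def to_ordinal(values, n_bins=4):
    """Equal-frequency binning: map each value to a bin index 0..n_bins-1."""
    cuts = quantiles(values, n=n_bins)
    return [sum(v > c for c in cuts) for v in values]


def pearson(x, y):
    """Plain Pearson correlation; assumes neither sequence is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def binned_correlation(x, y, n_bins=4):
    """Correlate the bin indices of x and y instead of the raw values."""
    return pearson(to_ordinal(x, n_bins), to_ordinal(y, n_bins))


if __name__ == "__main__":
    print(jaccard([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
    print(flag_outliers([10, 12, 11, 13, 12, 11, 10, 95]))
    x = list(range(1, 21))
    y = [v ** 3 for v in x]
    print(pearson(x, y), binned_correlation(x, y))
```

With y = x³, the raw Pearson correlation stays below 1 even though y is a deterministic, monotone function of x, while the binned version recovers a perfect association. The number of bins is fixed at 4 purely for illustration; BROOM's optimal-number-of-bins method is not reproduced.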

Beyond these core scripts, there are three more, which are auxiliary and either derive from them or are used elsewhere in my data science ecosystem. These auxiliary scripts are:

- Optimal Binary Fusion (OBF) – This script provides a couple of methods for merging, or fusing, binary features. It makes use of the holistic symmetric similarity (HSS) metric defined in the binary feature evaluation script, as well as five fundamental binary operators for a pair of binary variables, to generate new binary features that have a stronger relationship with the target variable (also a binary feature). This is the most recent script developed for this framework.

- Optimal Discretization of a continuous variable (OD) – Since Stats does a terrible job at discretizing variables in meaningful ways, this script attempts to address this. Namely, it provides three different strategies for binarizing and for discretizing a given continuous variable: one using the variable on its own and two using the variable in relation to another variable (target). The OD script relies heavily on all the core BROOM scripts, except the OM one, and is essential for ectropy (not entropy) calculations.

- Operators – The OM script makes use of a series of operators for combining a pair of continuous variables. All these operators live in this script, which has also been used in a different project before. The operators script is quite extensive, though only a subset of the operators considered made it into the final set of functions.
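The fusion idea behind the OBF script can be sketched in a few lines. The article doesn't name its five binary operators or define HSS, so this hedged Python sketch assumes five common ones (AND, OR, XOR and the two "and not" combinations) and uses plain Jaccard similarity to the target as a stand-in score; the operator set, the function names and the scoring are all hypothetical, not BROOM's.

```python
# Hypothetical sketch, not BROOM code: the five operators below and the use
# of plain Jaccard similarity (instead of HSS) as the score are assumptions.

OPERATORS = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "A_AND_NOT_B": lambda a, b: a & (1 - b),
    "B_AND_NOT_A": lambda a, b: b & (1 - a),
}


def jaccard(x, y):
    """Jaccard similarity of two binary (0/1) sequences."""
    both = sum(1 for a, b in zip(x, y) if a and b)
    either = sum(1 for a, b in zip(x, y) if a or b)
    return both / either if either else 0.0


def best_fusion(f1, f2, target):
    """Apply every operator to (f1, f2) element-wise and keep the fused
    feature most similar to the binary target (first one wins on ties)."""
    best = (None, None, -1.0)
    for name, op in OPERATORS.items():
        fused = [op(a, b) for a, b in zip(f1, f2)]
        score = jaccard(fused, target)
        if score > best[2]:
            best = (name, fused, score)
    return best


if __name__ == "__main__":
    f1 = [1, 1, 0, 0, 1, 0]
    f2 = [1, 0, 1, 0, 1, 0]
    target = [1, 0, 0, 0, 1, 0]
    print(jaccard(f1, target), jaccard(f2, target))
    print(best_fusion(f1, f2, target))
```

In this toy case neither input alone matches the target (a Jaccard similarity of 2/3 each), but their AND matches it exactly, which is the kind of gain fusion is after.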

So, regardless of what kind of data you have, you can use BROOM to clean it up and get it ready for your model, be it a predictive model (e.g., a classifier) or a descriptive one (e.g., a clustering system). BROOM can help you pinpoint the best variables to use as features and prepare them for your model (i.e., turn them into actual features or meta-features).

Although this is not an open project, I welcome anyone willing to contribute to it. The only prerequisites are:

- Good command of the Julia language

- Solid understanding of Data Science or Data Analytics

- No affiliation with a larger organization that may use this as its IP

- Willingness to work remotely via a cloud platform

Cheers!

Comments

Zacharias 🐝 Voulgaris

2 years ago

Here is the link to the article mentioned previously: https://us.bebee.com/producer/statistics-shortcomings-x3kdrWSMrESD